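After getting the basic CI/CD pipeline working in the previous post, I spent a few more days on some more advanced features. This post covers code scanning, full-stack monitoring, and canary releases, plus the pitfalls I ran into along the way.

Starting with a Go project

Until now I had only used the stock Nginx image. This time I wanted something closer to a real application, so I wrote a simple Go web service.

Creating the project

My local Go version is 1.25. Let's get started: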

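```bash
mkdir go-demo-app
cd go-demo-app
go mod init go-demo-app

go get github.com/prometheus/client_golang/prometheus/promhttp
# Run go mod tidy again once main.go exists; with no code importing the
# package yet, tidy would drop the dependency from go.mod and go.sum
go mod tidy
```

Writing the code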

main.go is simple, with just three endpoints:

```go
package main

import (
	"fmt"
	"net/http"
	"os"

	"github.com/prometheus/client_golang/prometheus/promhttp"
)

var version = "v1.0.0"

func main() {
	// Home page
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		hostname, _ := os.Hostname()
		fmt.Printf("Received request from %s\n", r.RemoteAddr)
		fmt.Fprintf(w, "<h1>Go Demo App</h1>")
		fmt.Fprintf(w, "<div>Version: <strong>%s</strong></div>", version)
		fmt.Fprintf(w, "<div>Hostname: %s</div>", hostname)
	})

	// Health check
	http.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
		w.Write([]byte("ok"))
	})

	// Prometheus metrics
	http.Handle("/metrics", promhttp.Handler())

	fmt.Printf("Starting server on port 8080, version: %s\n", version)
	if err := http.ListenAndServe(":8080", nil); err != nil {
		fmt.Printf("Error starting server: %s\n", err)
		os.Exit(1) // exit non-zero so the container is reported as failed
	}
}
```

Dockerfile

It uses a multi-stage build, so the final image is only a dozen or so MB:

```dockerfile
# Build stage
FROM reg.westos.org/library/golang:1.25-alpine AS builder

WORKDIR /app

# Use a Go module proxy to speed up downloads
ENV GOPROXY=https://goproxy.cn,direct

COPY go.mod go.sum ./
RUN go mod download

COPY . .
RUN CGO_ENABLED=0 GOOS=linux go build -o go-demo-app .

# Runtime stage
FROM reg.westos.org/library/alpine:latest

WORKDIR /root/

COPY --from=builder /app/go-demo-app .

EXPOSE 8080

CMD ["./go-demo-app"]
```

Because Kaniko can't reach Docker Hub, I pushed the base images to Harbor beforehand:

```bash
docker pull golang:1.25-alpine
docker pull alpine:latest

docker login reg.westos.org -u admin -p 12345

docker tag golang:1.25-alpine reg.westos.org/library/golang:1.25-alpine
docker tag alpine:latest reg.westos.org/library/alpine:latest

docker push reg.westos.org/library/golang:1.25-alpine
docker push reg.westos.org/library/alpine:latest
```

Pushing to Gitea

Create a go-demo-app repository in Gitea, then push the code:

```bash
git init
git add .
git commit -m "Initial commit: Go app with metrics"
git branch -M main
git remote add origin http://192.168.100.10:30030/admin/go-demo-app.git
git push -u origin main
```

Configuring the Jenkins pipeline

Create a Jenkinsfile in the repository root to build the image automatically:

```groovy
pipeline {
  agent {
    kubernetes {
      yaml '''
        apiVersion: v1
        kind: Pod
        spec:
          hostAliases:
          - ip: "192.168.100.14"
            hostnames:
            - "reg.westos.org"
          containers:
          - name: kaniko
            image: gcr.io/kaniko-project/executor:debug
            command:
            - sleep
            - infinity
            volumeMounts:
            - name: registry-creds
              mountPath: /kaniko/.docker/
          volumes:
          - name: registry-creds
            secret:
              secretName: harbor-auth
              items:
              - key: .dockerconfigjson
                path: config.json
      '''
    }
  }

  environment {
    IMAGE_REPO = "reg.westos.org/library/go-demo-app"
    IMAGE_TAG  = "v1.0.${BUILD_NUMBER}"
  }

  stages {
    stage('Checkout') {
      steps {
        checkout scm
      }
    }

    stage('Build & Push') {
      steps {
        container('kaniko') {
          sh """
            /kaniko/executor \
              --context `pwd` \
              --dockerfile `pwd`/Dockerfile \
              --destination ${IMAGE_REPO}:${IMAGE_TAG} \
              --destination ${IMAGE_REPO}:latest \
              --skip-tls-verify \
              --insecure
          """
        }
      }
    }

    stage('Update Manifest') {
      steps {
        echo "TODO: Update ArgoCD manifest with new tag ${IMAGE_TAG}"
      }
    }
  }
}
```

In Jenkins, create a new Pipeline job, choose "Pipeline script from SCM", point it at the go-demo-app repository in Gitea, and set the branch to main.

Run a build, then check Harbor to see whether the image was created.

Preparing the K8s manifests

In the go-demo-app repository, create deploy/deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-app
  namespace: default
  labels:
    app: go-demo-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: go-demo-app
  template:
    metadata:
      labels:
        app: go-demo-app
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: "/metrics"
    spec:
      hostAliases:
      - ip: "192.168.100.14"
        hostnames:
        - "reg.westos.org"
      containers:
      - name: go-demo-app
        image: reg.westos.org/library/go-demo-app:latest
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: "500m"
            memory: "128Mi"
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 10
      imagePullSecrets:
      - name: harbor-auth
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-app-svc
  namespace: default
  labels:
    app: go-demo-app
spec:
  type: NodePort
  selector:
    app: go-demo-app
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    nodePort: 30095
```

Push it to Gitea, then create a new application called go-demo-app in ArgoCD:

- Repository URL: http://192.168.100.10:30030/admin/go-demo-app.git
- Path: deploy
- Sync Policy: Automatic, with Prune and Self Heal checked

After the sync, open http://192.168.100.10:30095. If the page loads, it worked.
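To run the same check from a script instead of a browser (for example as a post-sync smoke test), here is a minimal stdlib-only Go sketch. It applies the rule a Kubernetes httpGet probe uses (any status from 200 to 399 counts as success) to /health, then checks the home page for the `<h1>Go Demo App</h1>` heading the app writes. The helper names probeOK, homePageOK, and smokeCheck are hypothetical, and the default URL is simply the NodePort address above.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"strings"
	"time"
)

// probeOK applies the Kubernetes httpGet probe rule: 200 <= code < 400 is success.
func probeOK(code int) bool {
	return code >= 200 && code < 400
}

// homePageOK checks for the heading that the "/" handler writes.
func homePageOK(body string) bool {
	return strings.Contains(body, "<h1>Go Demo App</h1>")
}

// smokeCheck (hypothetical helper) probes /health, then fetches and checks "/".
func smokeCheck(baseURL string) error {
	client := &http.Client{Timeout: 5 * time.Second}

	resp, err := client.Get(baseURL + "/health")
	if err != nil {
		return fmt.Errorf("GET /health: %w", err)
	}
	resp.Body.Close()
	if !probeOK(resp.StatusCode) {
		return fmt.Errorf("GET /health returned %d", resp.StatusCode)
	}

	resp, err = client.Get(baseURL + "/")
	if err != nil {
		return fmt.Errorf("GET /: %w", err)
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return fmt.Errorf("reading /: %w", err)
	}
	if !homePageOK(string(body)) {
		return fmt.Errorf("GET / did not contain the expected heading")
	}
	return nil
}

func main() {
	baseURL := "http://192.168.100.10:30095" // NodePort from the Service above
	if len(os.Args) > 1 {
		baseURL = os.Args[1]
	}
	if err := smokeCheck(baseURL); err != nil {
		fmt.Println("FAIL:", err)
		os.Exit(1)
	}
	fmt.Println("OK:", baseURL)
}
```

Because it exits non-zero on failure, a check like this could run as an extra pipeline step after ArgoCD syncs.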

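Closing the CI loop

Right now, every time Jenkins builds a new image I still have to edit the image tag in deployment.yaml by hand, which is silly. Let's have Jenkins do it instead.

Updating the Jenkinsfile

The main change is adding a git-tools container that commits the updated manifest: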

```groovy
pipeline {
  agent {
    kubernetes {
      yaml '''
        apiVersion: v1
        kind: Pod
        spec:
          hostAliases:
          - ip: "192.168.100.14"
            hostnames:
            - "reg.westos.org"
          containers:
          - name: kaniko
            image: gcr.io/kaniko-project/executor:debug
            command:
            - sleep
            - infinity
            volumeMounts:
            - name: registry-creds
              mountPath: /kaniko/.docker/
          - name: git-tools
            image: bitnami/git:latest
            command:
            - sleep
            - infinity
          volumes:
          - name: registry-creds
            secret:
              secretName: harbor-auth
              items:
              - key: .dockerconfigjson
                path: config.json
      '''
    }
  }

  environment {
    IMAGE_REPO = "reg.westos.org/library/go-demo-app"
    IMAGE_TAG  = "v1.0.${BUILD_NUMBER}"
    GITEA_REPO = "192.168.100.10:30030/admin/go-demo-app.git"
  }

  stages {
    stage('Checkout') {
      steps {
        checkout scm
      }
    }

    stage('Build & Push') {
      steps {
        container('kaniko') {
          sh """
            /kaniko/executor \
              --context `pwd` \
              --dockerfile `pwd`/Dockerfile \
              --destination ${IMAGE_REPO}:${IMAGE_TAG} \
              --destination ${IMAGE_REPO}:latest \
              --skip-tls-verify \
              --insecure
          """
        }
      }
    }

    stage('Update Manifest') {
      steps {
        container('git-tools') {
          withCredentials([usernamePassword(credentialsId: 'gitea-auth',
                                            usernameVariable: 'GIT_USER',
                                            passwordVariable: 'GIT_PASS')]) {
            // Single quotes: the shell expands the credentials, so Groovy
            // never interpolates the secret into the script text
            sh '''
              git config --global user.email "jenkins@westos.org"
              git config --global user.name "Jenkins CI"
              git config --global --add safe.directory '*'

              git checkout main
              git pull origin main

              sed -i "s|image: ${IMAGE_REPO}:.*|image: ${IMAGE_REPO}:${IMAGE_TAG}|" deploy/deployment.yaml

              echo "Updated deployment.yaml:"
              grep "image:" deploy/deployment.yaml

              if git status --porcelain | grep -q deploy/deployment.yaml; then
                git add deploy/deployment.yaml
                git commit -m "Deploy: update image tag to ${IMAGE_TAG} [skip ci]"
                git push http://${GIT_USER}:${GIT_PASS}@${GITEA_REPO} main
              else
                echo "No changes to commit"
              fi
            '''
          }
        }
      }
    }
  }
}
```

Push the change and run a build, then check in Gitea whether deployment.yaml received the new tag. If it did, check in ArgoCD whether the Pod was restarted. That closes the CI loop.

Adding code scanning (SonarQube)

To make things look a bit more professional, let's add code scanning.

Installing SonarQube

```bash
helm repo add sonarqube https://SonarSource.github.io/helm-chart-sonarqube
helm repo update

kubectl create namespace sonarqube

cat <<EOF > sonar-values.yaml
community:
  enabled: true

service:
  type: NodePort
  nodePort: 30099

persistence:
  enabled: true
  storageClass: "nfs-client"
  size: 5Gi

elasticsearch:
  configureNode: false

monitoringPasscode: "westos_monitor_123"
EOF

helm install sonarqube sonarqube/sonarqube -n sonarqube -f sonar-values.yaml

kubectl get pods -n sonarqube -w
```

SonarQube is slow to start, so give it 2-3 minutes.

Configuring SonarQube

Open http://192.168.100.10:30099. The default credentials are admin/admin, and you must change the password on first login; I changed it to Admin123456789!.

Create a project:

- Create a local project
- Project display name: go-demo-app
- Project Key: go-demo-app
- Main branch name: main
- Choose "Follows the instance's default", then click Create

Next, generate a token: choose "Locally", click Generate, and copy the token (it looks like sqp_xxxx...).

Configuring Jenkins

- Install the plugin: SonarQube Scanner
- Add a credential:
  - Kind: Secret text
  - Secret: paste the token from the previous step
  - ID: sonar-token
- Configure the system:
  - Manage Jenkins → System → SonarQube servers
  - Check "Environment variables"
  - Name: sonar-server
  - Server URL: http://sonarqube-sonarqube.sonarqube.svc.cluster.local:9000
  - Token: select sonar-token

Updating the Jenkinsfile

Add a sonar-cli container to the Pod template, then add a Code Analysis stage before Build & Push:

```yaml
# Add this container to the Pod template
- name: sonar-cli
  image: sonarsource/sonar-scanner-cli:latest
  command:
  - sleep
  - infinity
```

```groovy
// Add this stage under stages, before 'Build & Push'
stage('Code Analysis') {
  steps {
    container('sonar-cli') {
      withSonarQubeEnv('sonar-server') {
        // Single quotes: the shell expands SONAR_AUTH_TOKEN, not Groovy
        sh '''
          sonar-scanner \
            -Dsonar.projectKey=go-demo-app \
            -Dsonar.sources=. \
            -Dsonar.host.url=http://sonarqube-sonarqube.sonarqube.svc.cluster.local:9000 \
            -Dsonar.login=$SONAR_AUTH_TOKEN
        '''
      }
    }
  }
}
```

Run a build; once it succeeds, check the SonarQube web UI for the scan report.

Resource tuning

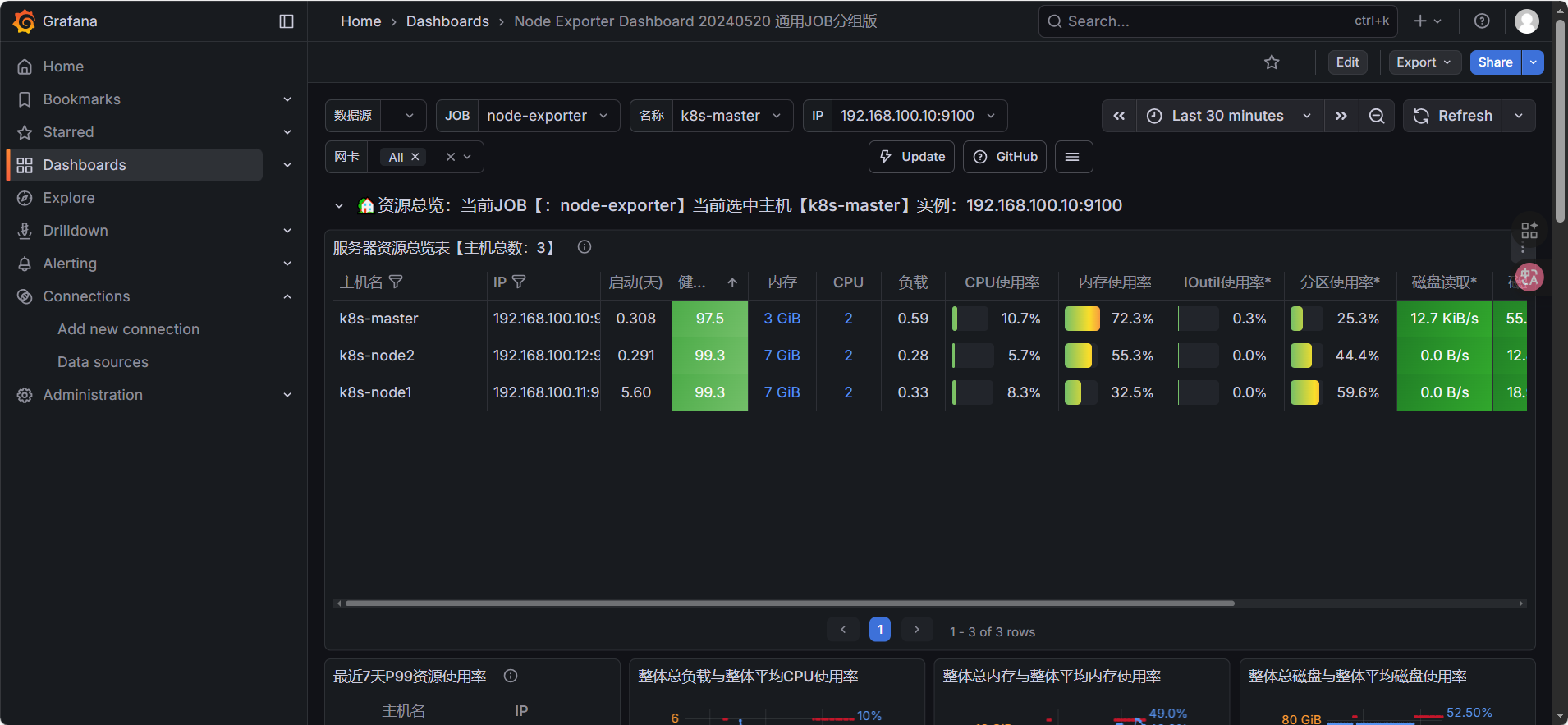

At this point the cluster started to struggle, so I installed metrics-server and checked node resources:

```bash
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Lab cluster: skip verification of the kubelets' self-signed serving certificates
kubectl patch deployment metrics-server -n kube-system --type='json' -p='[{"op": "add", "path": "/spec/template/spec/containers/0/args/-", "value": "--kubelet-insecure-tls"}]'

kubectl top nodes
```

Result:

```text
NAME         CPU(cores)   CPU(%)   MEMORY(bytes)   MEMORY(%)
k8s-master   134m         6%       1227Mi          74%
k8s-node1    133m         6%       5524Mi          73%
k8s-node2    59m          2%       856Mi           11%
```

node1 is working itself to death while node2 is slacking off. Now check the Pods:

```bash
kubectl top pods -A --sort-by=memory
```

```text
NAMESPACE     NAME                        CPU(cores)   MEMORY(bytes)
sonarqube     sonarqube-sonarqube-0       19m          2186Mi
jenkins       jenkins-0                   2m           1495Mi
kube-system   kube-apiserver-k8s-master   31m          494Mi
```

The problem is obvious: the load is unevenly spread across nodes, and SonarQube and Jenkins use far too much memory.

Rebalancing the nodes

First cordon node1 so nothing new is scheduled there, then delete the SonarQube and Jenkins Pods so they are recreated on node2:

```bash
kubectl cordon k8s-node1

kubectl delete pod sonarqube-sonarqube-0 -n sonarqube
kubectl delete pod -n jenkins -l app.kubernetes.io/name=jenkins

# Confirm they landed on node2
kubectl -n sonarqube get pod -o wide
kubectl -n jenkins get pod -o wide

# Allow scheduling on node1 again
kubectl uncordon k8s-node1
```

The node metrics look much more balanced now:

```text
NAME         CPU(cores)   CPU(%)   MEMORY(bytes)   MEMORY(%)
k8s-master   130m         6%       1277Mi          77%
k8s-node1    68m          3%       1440Mi          19%
k8s-node2    145m         7%       883Mi           11%
```

Capping memory usage

Add resource limits to sonar-values.yaml:

```yaml
resources:
  requests:
    cpu: "200m"
    memory: "1Gi"
  limits:
    cpu: "1000m"
    memory: "1800Mi"

jvmCeOpts: "-Xmx1024m -Xms512m"
jvmOpts: "-Xmx1024m -Xms512m"
```

Apply the change:

```bash
helm upgrade sonarqube sonarqube/sonarqube -n sonarqube -f sonar-values.yaml
```

Then edit jenkins-manual.yaml:

```yaml
controller:
  javaOpts: "-Xms512m -Xmx800m -Djenkins.install.runSetupWizard=false"

  resources:
    requests:
      cpu: "200m"
      memory: "512Mi"
    limits:
      cpu: "1000m"
      memory: "1280Mi"
```

Apply the change:

```bash
helm upgrade jenkins jenkins/jenkins -n jenkins -f jenkins-manual.yaml
```

Memory usage afterwards:

```text
NAMESPACE     NAME                        CPU(cores)   MEMORY(bytes)
sonarqube     sonarqube-sonarqube-0       913m         767Mi
kube-system   kube-apiserver-k8s-master   53m          530Mi
jenkins       jenkins-0                   945m         328Mi
```

The improvement is clear. Things are still a bit sluggish, but at least the whole node no longer falls over.
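A quick sanity check for limits like these is to subtract the JVM's maximum heap from the container's memory limit; whatever remains has to hold all off-heap memory. The Go sketch below does that arithmetic. One assumption worth flagging: as far as I know, the SonarQube chart's jvmOpts and jvmCeOpts configure two separate JVMs (web server and Compute Engine) running in the same container, so their heaps add up; the Elasticsearch JVM is not counted at all. The helper names are hypothetical, and only Mi/Gi quantities and lowercase `-Xmx<N>m` flags are parsed.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseMi converts a binary memory quantity such as "1800Mi" or "1Gi" to MiB.
// Only the Mi and Gi suffixes are handled in this sketch.
func parseMi(q string) (int, error) {
	switch {
	case strings.HasSuffix(q, "Gi"):
		n, err := strconv.Atoi(strings.TrimSuffix(q, "Gi"))
		return n * 1024, err
	case strings.HasSuffix(q, "Mi"):
		return strconv.Atoi(strings.TrimSuffix(q, "Mi"))
	default:
		return 0, fmt.Errorf("unsupported quantity %q", q)
	}
}

// xmxMi returns N from a -Xmx<N>m flag (lowercase m = MiB) in a JVM options string.
func xmxMi(opts string) (int, error) {
	for _, f := range strings.Fields(opts) {
		if strings.HasPrefix(f, "-Xmx") && strings.HasSuffix(f, "m") {
			return strconv.Atoi(strings.TrimSuffix(strings.TrimPrefix(f, "-Xmx"), "m"))
		}
	}
	return 0, fmt.Errorf("no -Xmx<N>m flag in %q", opts)
}

// headroomMi returns the limit minus the sum of max heaps, treating each
// options string as a separate JVM in the same container.
func headroomMi(limit string, jvmOpts ...string) (int, error) {
	left, err := parseMi(limit)
	if err != nil {
		return 0, err
	}
	for _, opts := range jvmOpts {
		heap, err := xmxMi(opts)
		if err != nil {
			return 0, err
		}
		left -= heap
	}
	return left, nil
}

func main() {
	// Values copied from sonar-values.yaml and jenkins-manual.yaml above.
	sonar, _ := headroomMi("1800Mi", "-Xmx1024m -Xms512m", "-Xmx1024m -Xms512m")
	jenkins, _ := headroomMi("1280Mi", "-Xms512m -Xmx800m -Djenkins.install.runSetupWizard=false")
	fmt.Printf("sonarqube (web + CE heaps): %d MiB left\n", sonar)
	fmt.Printf("jenkins: %d MiB left\n", jenkins)
}
```

With these values it reports -248 MiB for SonarQube and 480 MiB for Jenkins. If the two-JVM assumption holds, the low 767Mi reading above only reflects heaps that have not grown yet; once they do, the container could be OOM-killed, so lowering one of the two -Xmx values may be worth considering.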

Full-stack monitoring

Next up is monitoring, using Prometheus + Grafana + Loki.

Deploying kube-prometheus-stack

```bash
cat <<EOF > monitor-values.yaml
grafana:
  service:
    type: NodePort
    nodePort: 30000

prometheus:
  prometheusSpec:
    retention: 5d
    resources:
      requests:
        memory: 512Mi
        cpu: 200m
      limits:
        memory: 1Gi
        cpu: 1000m
    serviceMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelector: {}
    serviceMonitorNamespaceSelector: {}

alertmanager:
  alertmanagerSpec:
    replicas: 1

kubeStateMetrics:
  enabled: true
nodeExporter:
  enabled: true
EOF

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update

kubectl create ns monitoring

helm install prometheus prometheus-community/kube-prometheus-stack \
  -n monitoring \
  -f monitor-values.yaml

kubectl get pods -n monitoring -w
```

Monitoring Jenkins

Install the Prometheus metrics plugin in Jenkins. After Jenkins restarts, open http://192.168.100.10:30080/prometheus/; if you see metrics, you're set.

Create jenkins-monitor.yaml:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: jenkins
  namespace: monitoring
  labels:
    release: prometheus
spec:
  selector:
    matchLabels:
      app.kubernetes.io/instance: jenkins
  namespaceSelector:
    matchNames:
    - jenkins
  endpoints:
  - port: http
    path: /prometheus/
    interval: 15s
```

Apply it:

```bash
kubectl apply -f jenkins-monitor.yaml
```

Accessing Grafana

Open http://192.168.100.10:30000 and log in as admin. Get the password with:

```bash
kubectl -n monitoring get secret prometheus-grafana \
  -o jsonpath="{.data.admin-password}" | base64 -d ; echo
```

Mine was: sgbrApYRHjBKbJzdv7YbS1LgGZGqPAToqZ4x1NDj

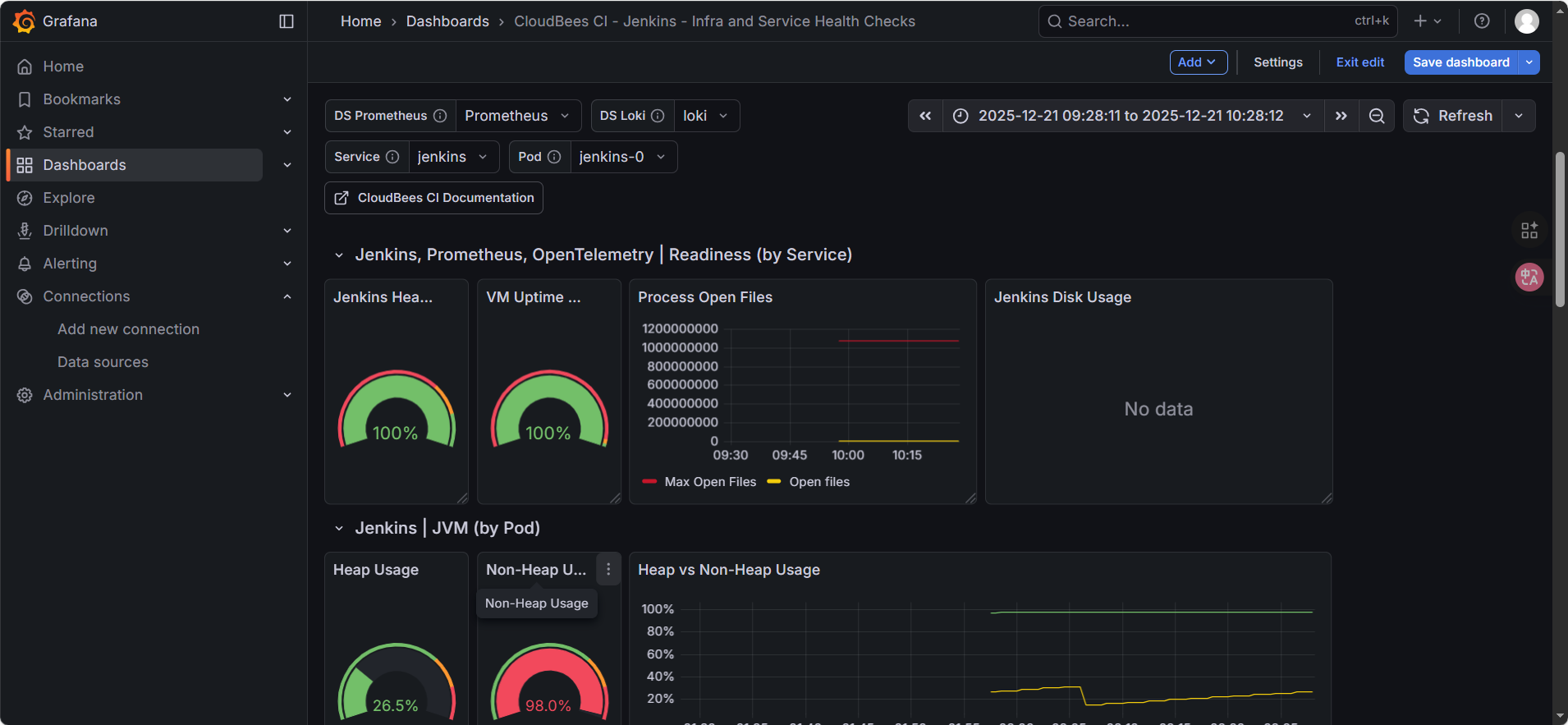

In Grafana, go to Dashboards → New → Import in the left menu, enter dashboard ID 24357, select Prometheus as the data source, and click Import.

The Jenkins monitoring dashboard then appears.

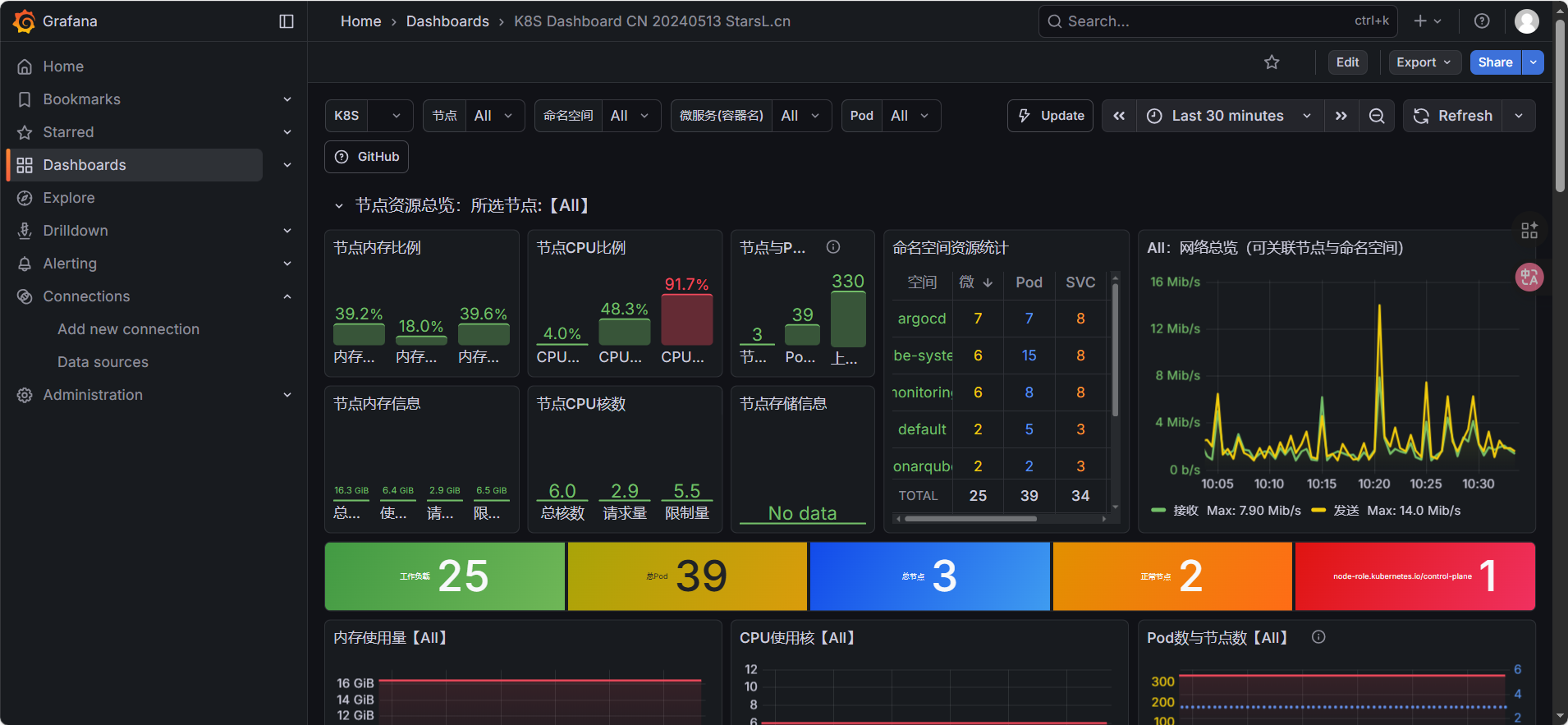

You can also import dashboards 16098 and 13105 to see host and K8s cluster information.

Monitoring the Go app

Create app-monitor.yaml:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: go-demo-app
  namespace: monitoring
  labels:
    release: prometheus
spec:
  selector:
    matchLabels:
      app: go-demo-app
  namespaceSelector:
    matchNames:
    - default
  endpoints:
  - port: http
    path: /metrics
    interval: 5s
```

Apply it:

```bash
kubectl apply -f app-monitor.yaml
```

In Grafana's Explore view, search for go_goroutines or process_cpu_seconds_total. If a graph shows up, it works.

Log aggregation (Loki)

Grafana is already installed, so only Loki and Promtail are needed.

```bash
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update

kubectl create ns logging

cat <<EOF > loki-values.yaml
loki:
  enabled: true

  image:
    repository: grafana/loki
    tag: "2.9.3"  # Grafana could not connect to the default 2.6 version
    pullPolicy: IfNotPresent

  config:
    auth_enabled: false

  commonConfig:
    replication_factor: 1

  storage:
    type: filesystem

  singleBinary:
    replicas: 1

  resources:
    requests:
      cpu: 100m
      memory: 256Mi
    limits:
      memory: 512Mi

promtail:
  enabled: true
  config:
    clients:
      - url: http://loki:3100/loki/api/v1/push
EOF

helm install loki grafana/loki-stack -n logging -f loki-values.yaml
```

Configuring the Grafana data source

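In Grafana, go to Connections → Data sources → Add data source and choose Loki.

Set the URL to http://loki.logging.svc.cluster.local:3100

Click Save & test.

Verifying the logs

Go to Grafana → Explore and select Loki as the data source.

Under Label filters, pick namespace = jenkins (or default) and click Run query.

Now you can see not only the CPU graphs but also the Pod logs on the same page: full-stack observability is in place.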

Canary releases with Argo Rollouts

Finally, let's set up canary releases so new versions go live without anyone watching.

Installing Argo Rollouts

```bash
kubectl create namespace argo-rollouts

kubectl apply -n argo-rollouts -f https://github.com/argoproj/argo-rollouts/releases/latest/download/install.yaml

# Install the kubectl plugin
curl -LO https://github.com/argoproj/argo-rollouts/releases/latest/download/kubectl-argo-rollouts-linux-amd64
chmod +x ./kubectl-argo-rollouts-linux-amd64
mv ./kubectl-argo-rollouts-linux-amd64 /usr/local/bin/kubectl-argo-rollouts

kubectl get pods -n argo-rollouts
```

Creating an AnalysisTemplate

In the deploy directory of the go-demo-app repository, create analysis.yaml:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate-check
  namespace: default
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    successCondition: result[0] == 1
    provider:
      prometheus:
        address: http://prometheus-kube-prometheus-prometheus.monitoring:9090
        query: |
          # Demo query that always returns 1
          # In production, replace it with a real success-rate query
          vector(1)
```

Converting the Deployment to a Rollout

Edit deploy/deployment.yaml. The main changes:

- Change apiVersion to argoproj.io/v1alpha1
- Change kind to Rollout
- Change replicas to 5 (makes the canary steps easier to see)
- Add the strategy.canary configuration

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: go-demo-app
  namespace: default
  labels:
    app: go-demo-app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: go-demo-app
  strategy:
    canary:
      steps:
      - setWeight: 20            # Shift 20% of traffic first
      - analysis:
          templates:
          - templateName: success-rate-check
          args:
          - name: service-name
            value: go-demo-app
      - pause: {duration: 30s}   # Observation window: wait 30 seconds
      - setWeight: 50            # Then shift 50%
      - pause: {duration: 10s}
      - setWeight: 100           # Finally, go to 100%
  template:
    metadata:
      labels:
        app: go-demo-app
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: "/metrics"
    spec:
      hostAliases:
      - ip: "192.168.100.14"
        hostnames:
        - "reg.westos.org"
      containers:
      - name: go-demo-app
        image: reg.westos.org/library/go-demo-app:latest
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: "200m"
            memory: "128Mi"
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 10
      imagePullSecrets:
      - name: harbor-auth
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-app-svc
  namespace: default
  labels:
    app: go-demo-app
spec:
  type: NodePort
  selector:
    app: go-demo-app
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    nodePort: 30095
```

Delete the old Deployment:

```bash
kubectl delete deployment go-demo-app
```

Push to Gitea, then click Sync in ArgoCD.
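The vector(1) query in the AnalysisTemplate always passes. A real success-rate check would divide non-5xx requests by all requests over a time window and compare the ratio with a threshold, which successCondition would then express as something like `result[0] >= 0.95` (the 0.95 threshold is my assumption). Note that the demo app as written does not export a per-status request counter, so a real query would also need that instrumentation. A minimal Go sketch of the gate logic itself, with hypothetical names and made-up counts:

```go
package main

import "fmt"

// successRate returns the fraction of non-5xx responses. With no traffic at
// all it returns 1; treating "no data" as a pass is a design choice here.
func successRate(countsByStatus map[int]int) float64 {
	total, failed := 0, 0
	for code, n := range countsByStatus {
		total += n
		if code >= 500 {
			failed += n
		}
	}
	if total == 0 {
		return 1
	}
	return float64(total-failed) / float64(total)
}

// passes mirrors a successCondition of the form "result[0] >= threshold".
func passes(rate, threshold float64) bool {
	return rate >= threshold
}

func main() {
	// Hypothetical request counts from one analysis window.
	window := map[int]int{200: 188, 404: 4, 500: 8}
	rate := successRate(window)
	fmt.Printf("success rate %.3f, pass=%v\n", rate, passes(rate, 0.95))
}
```

Whether an empty window should pass or fail is worth deciding explicitly: right after a canary starts there may be no traffic yet, and failing on "no data" would abort every rollout.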

Simulating a canary release

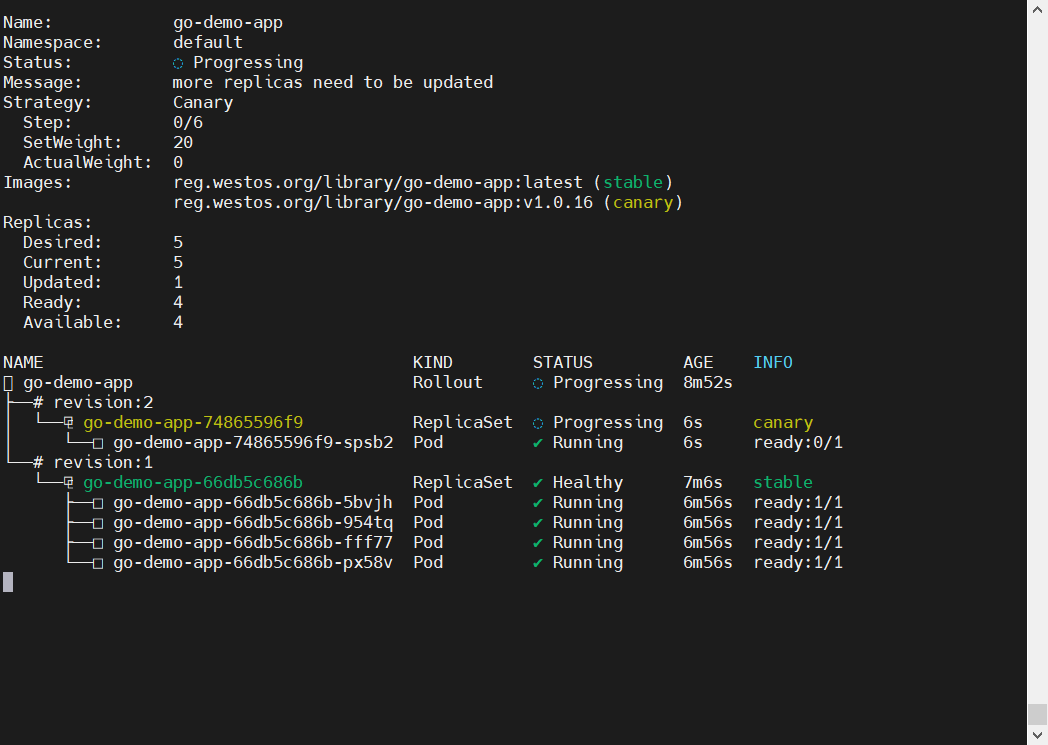

Open a terminal window and watch the Rollout status live:

```bash
kubectl argo rollouts get rollout go-demo-app -n default -w
```

Then trigger a build in Jenkins.

What happens:

- Step 1: 状态变为 Paused,新版本 Pod 启动 1 个(20%),旧版本 4 个

- Analysis: 后台跑 AnalysisRun,连 Prometheus 查询

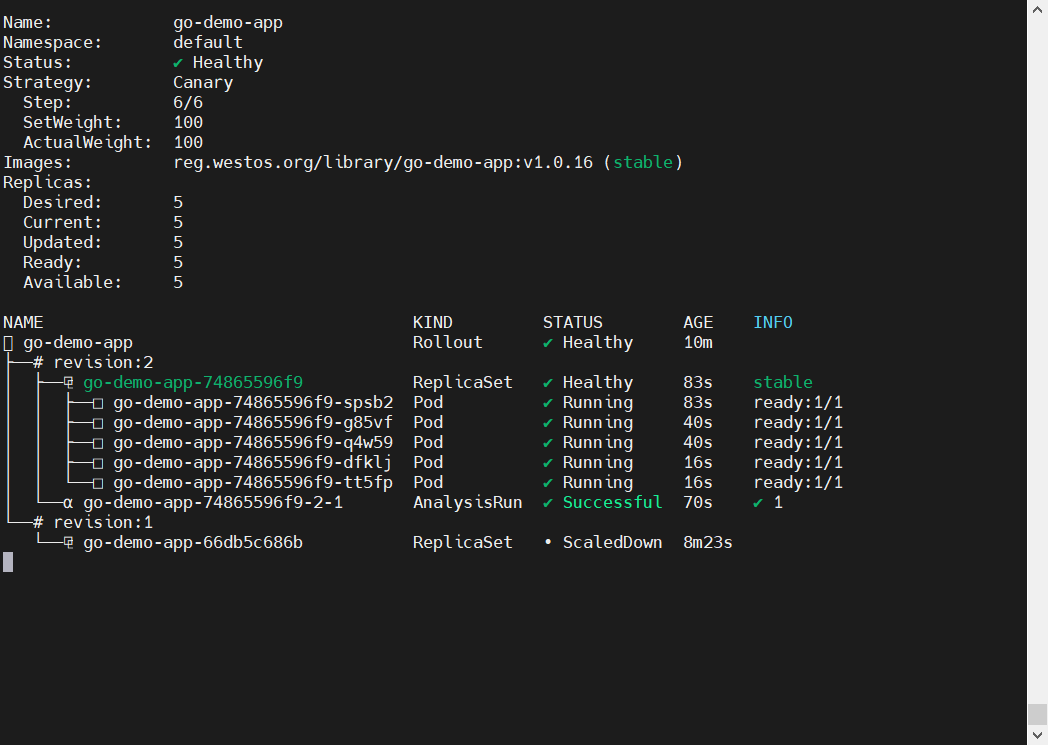

vector(1) - Pass: 如果 Prometheus 连接正常,Analysis 显示 Successful

- Step 2: 权重自动增加到 50%

- Complete: 最终权重变成 100%,旧版本 Pod 全部消失

All Pods now run the new version.
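Without a traffic-routing integration (none is configured here), Argo Rollouts approximates setWeight with Pod counts behind the same Service. The Go sketch below shows that arithmetic under a simplifying assumption: the canary count is the weight's share of spec.replicas rounded up, and the stable side keeps the rest. It ignores maxSurge/maxUnavailable and transitional states, so real Pod counts can briefly differ. It does reproduce the 1 new / 4 old split from Step 1.

```go
package main

import "fmt"

// canaryReplicas approximates the canary Pod count for a weight (0-100):
// ceil(replicas * weight / 100), computed with integer arithmetic.
func canaryReplicas(replicas, weight int) int {
	return (replicas*weight + 99) / 100
}

func main() {
	const replicas = 5                     // spec.replicas in the Rollout above
	for _, w := range []int{20, 50, 100} { // the setWeight steps
		c := canaryReplicas(replicas, w)
		fmt.Printf("setWeight %3d%% -> %d canary, %d stable\n", w, c, replicas-c)
	}
}
```

With 5 replicas, the 50% step cannot be split evenly, so under this rounding it becomes 3 canary and 2 stable Pods; the actual traffic share then depends on how the Service spreads connections across those Pods.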

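The whole process is fully automated, with no manual intervention. That's the appeal of GitOps combined with canary releases.

Summary

After all these days of tinkering, going from basic CI/CD to code scanning, full-stack monitoring, and canary releases, I've now been through the whole cloud-native toolchain once. I ran into plenty of resource shortages and configuration mistakes along the way, but each fix taught me something new.

My biggest takeaway: cloud native is no silver bullet, but it does solve many problems that traditional deployment methods can't. Canary releases, for example, used to take a pile of scripts; now a single YAML file does the job.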